We Make AI Shine

NeuReality is reimagining AI infrastructure to eliminate system bottlenecks and unlock the full potential of GPUs

Released from the shackles of legacy architectures, we prioritize infrastructure cost, energy efficiency, and end-user experience, transforming AI from a promising technology into practical and impactful business value.

Mohamed Awad, General Manager of the Infrastructure Business, ARM

Partners

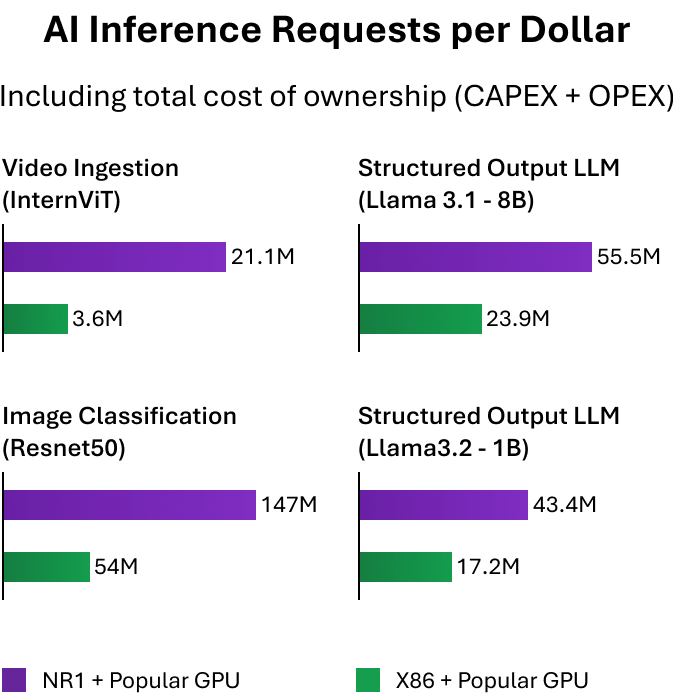

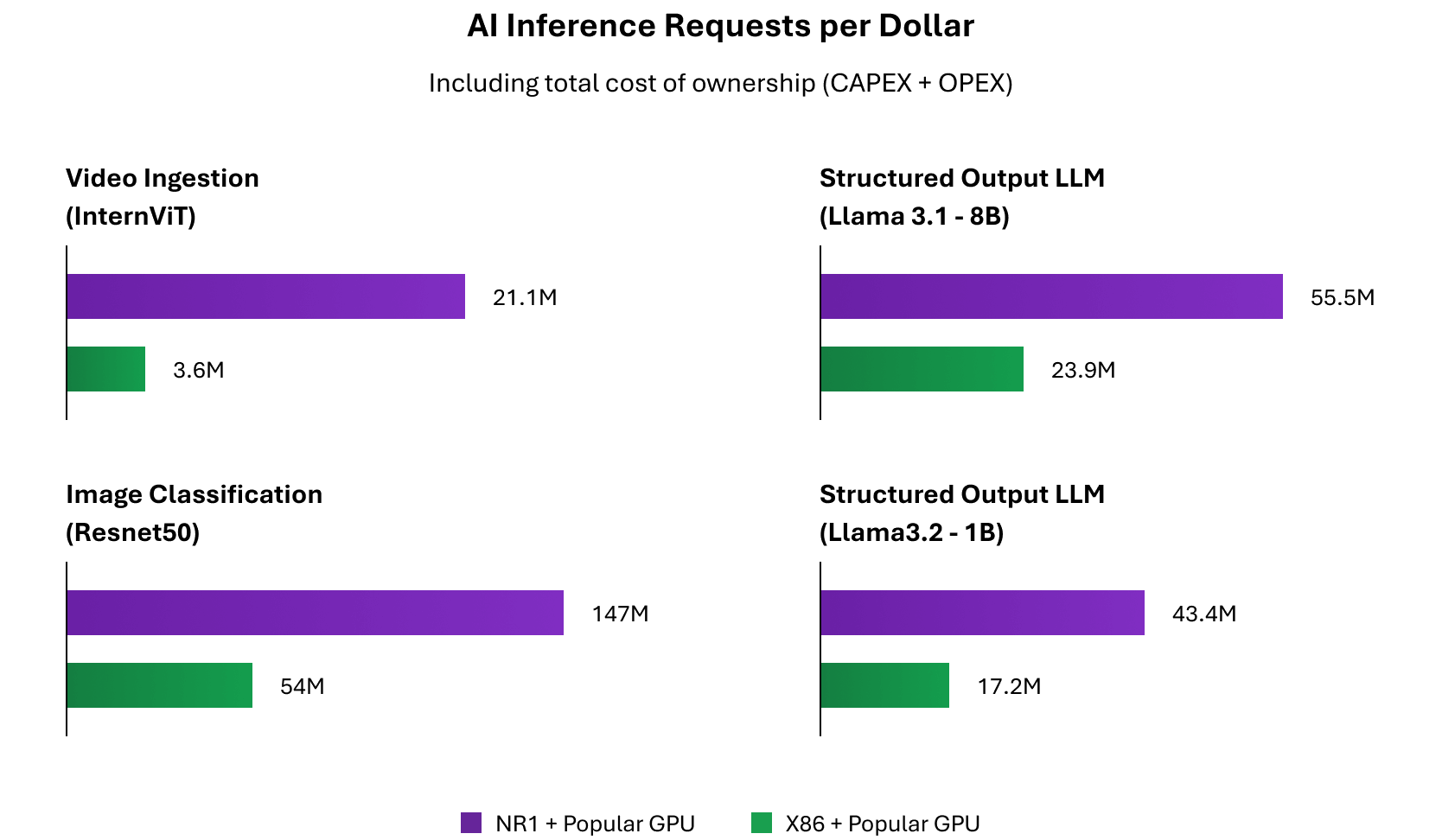

Cost efficiency across every Model and Modality

Other CPU-reliant systems limit GPU output. NeuReality boosts GPU output to its full potential while reducing system cost and energy overhead, achieving the best system performance per dollar.

AI Inference Solution

Inference Appliance

The first server purpose-built for AI inference, marrying software and hardware

- Doubles average GPU utilization to nearly 100% compared with traditional CPU-reliant systems

- Comes preloaded with generative and agentic AI models for 3x faster time-to-value

Software

The connective tissue woven into the very fabric of the NR1 architecture

- The intelligent orchestration layer, built for simplified AI deployment

- Co-designed from inception with the NR1 Chip for seamless integration

AI-CPU

The first true AI-CPU, engineered for inference at scale

- Combines ARM-based CPU compute, networking, orchestration, integrated media processors, and hardware-driven AI-Hypervisor IP on a single chip

- Pairs with any AI accelerator – GPU, FPGA, ASIC, or alternative XPU – and any AI model

AI-SuperNIC

High-performance networking engineered for AI factories

- Seamless scale to giga-factories – 1.6 Tbps throughput, ultra-low latency, and UEC support for efficient growth at any scale

- Maximized GPU utilization – in-network compute offloads collectives, freeing GPUs to focus fully on AI workloads

Join Us to Transform your AI Infrastructure

“Today, global AI adoption is only 42%, with US even lower at 33%” (Exploding Topics, May 2025)

We aim to remove barriers to deployment, so you can scale your business faster with the power of AI.

Unlock your GPUs

Our open, vendor-agnostic technology makes any accelerator, such as a GPU, run faster and work harder.

Empower AI Models

We simplify complex AI infrastructure with out-of-the-box optimized models and backends for common AI frameworks, so models deliver more and reach their full potential with ease.

Accelerate your AI adoption

By accelerating AI workloads, we shorten time to market (TTM) and boost business value and ROI.