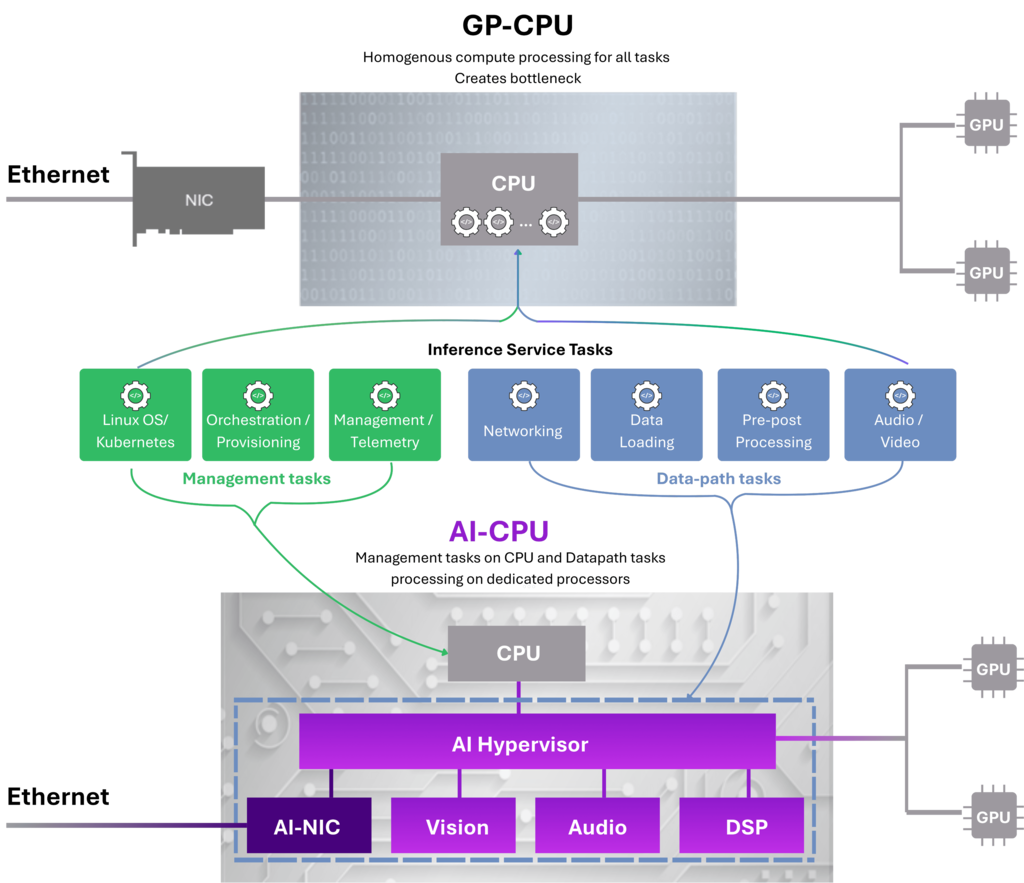

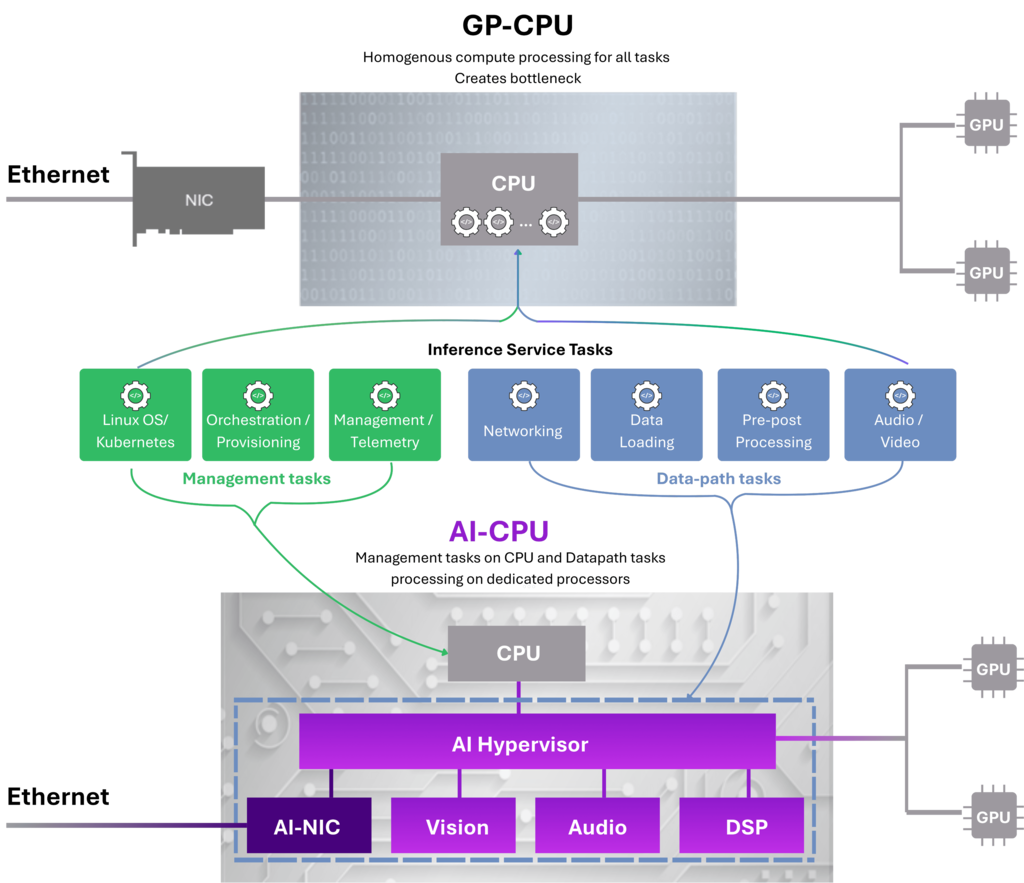

A conventional CPU saturates with networking, hardware-control, and data-processing tasks, causing system bottlenecks and reduced output.

AI-CPU

Purpose-built for AI inference head nodes.

The NR1® Chip is the first AI-CPU, creating perfect system harmony with any GPU to eliminate bottlenecks and achieve maximum GPU output.

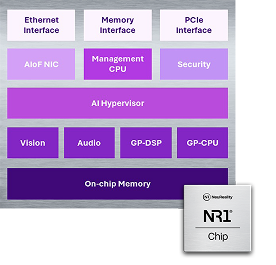

The NR1 AI-CPU is engineered to transform your Inference-as-a-Service unit economics by solving the fundamental architectural bottleneck caused by dated general-purpose CPU and NIC technologies. It is a heterogeneous-compute server on a chip, featuring enhanced video, audio, DSP, and CPU processors, with a hardware-based AI-Hypervisor that orchestrates the harmony between those processors and any attached GPU, all fused with an embedded AI-over-Fabric network engine. The result is the best system performance per dollar.

Benefits of NR1 Disruptive Technology

Drives GPU utilization to its maximum

Up to 10x more energy-efficient than legacy CPU + NIC

Up to 6x more tokens per dollar

Lowest total cost of ownership

Greater throughput, improved cost and energy efficiency

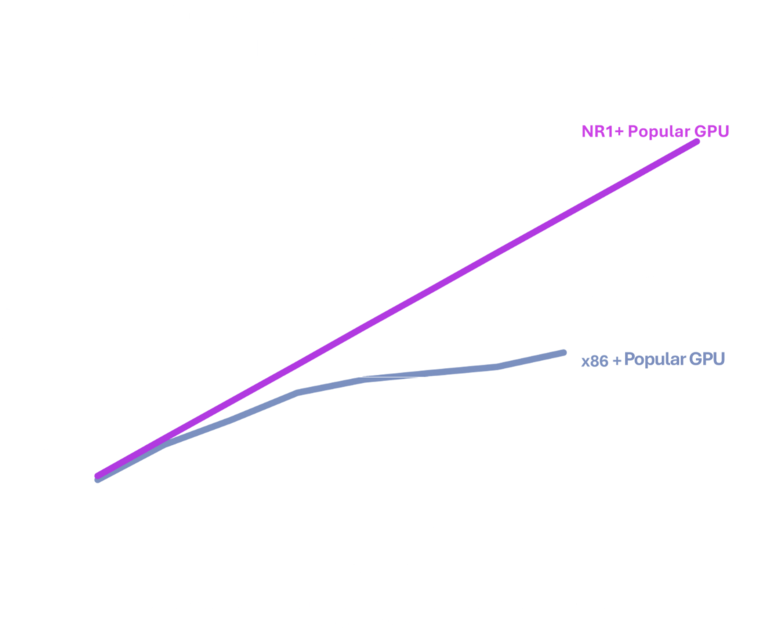

CPU Reliant System

AI-CPU Head Node

Offloads networking, hardware control, and data processing to dedicated hardware, keeping GPUs fully utilized

NR1 AI-CPU unleashes the true potential of GPUs

The NR1 Chip drives GPU utilization to its theoretical maximum, whereas in legacy x86 CPU-based AI servers, increasing GPU density results in significant utilization loss.