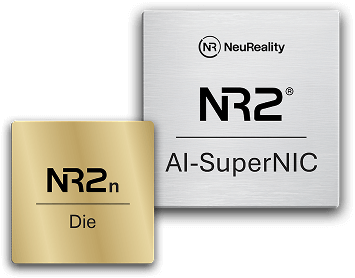

AI-SuperNIC

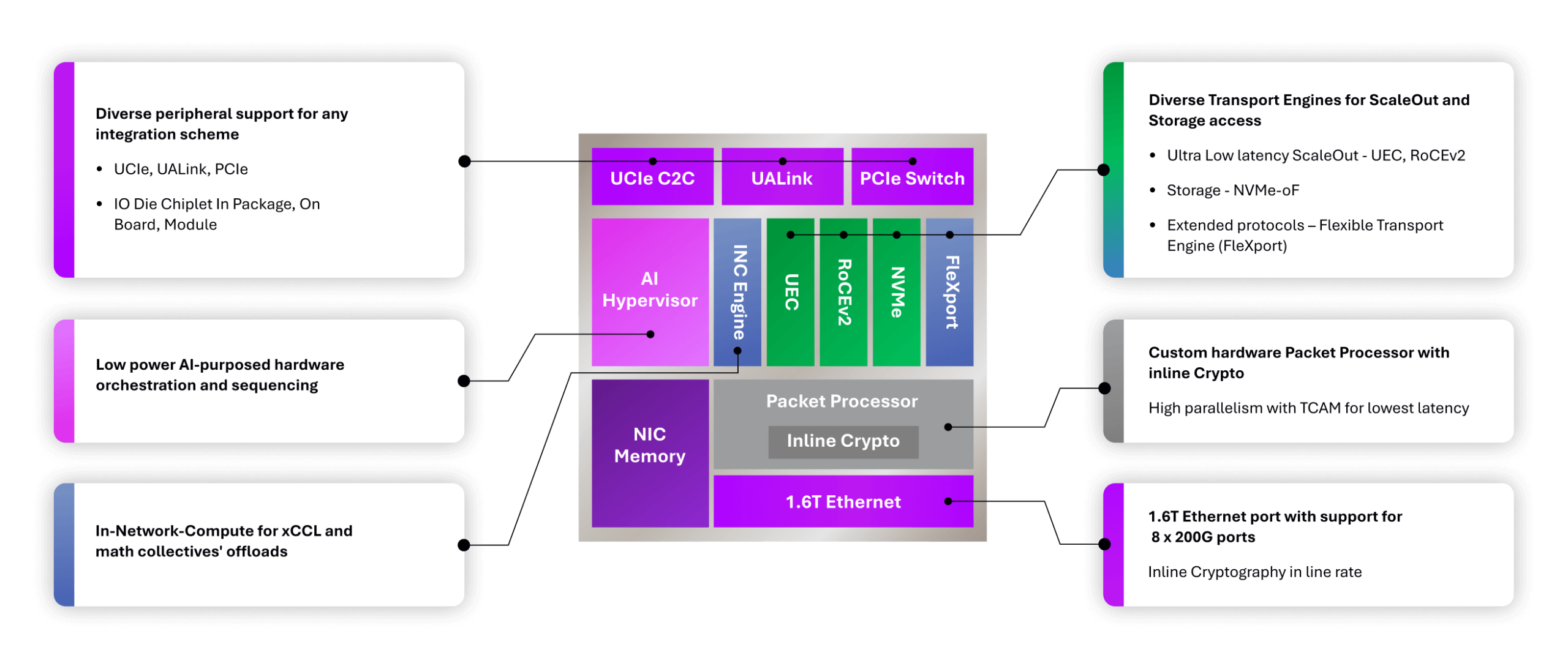

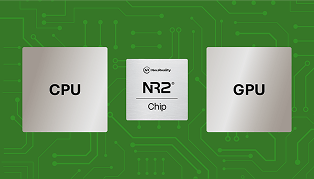

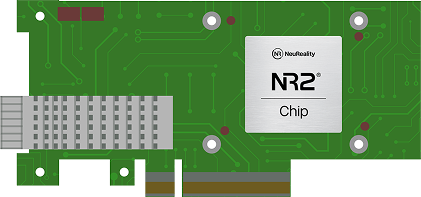

Purpose-built for GPU scale-out in AI training and inference gigafactories, the NR2 chip is the first AI-SuperNIC designed to leap ahead in bandwidth and latency while integrating compute capabilities to deliver maximum system output.

Built on the novel networking and compute technology NeuReality introduced in the NR1, the NR2 AI-SuperNIC is engineered to boost GPU utilization and to integrate natively with xCCL libraries, supporting network collectives including math collectives. This enables customers to leap ahead and meet their rapidly growing demand for AI infrastructure, whether for training or inference, with unmatched efficiency and with any GPU or XPU they choose.

NR2 AI-SuperNIC Chip: Purpose-Built for GPU Scale-Out

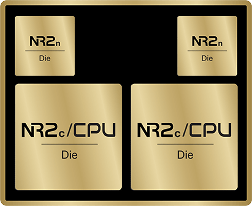

NR2n: Built with Flexibility in Mind to Fit Your Integration Preference

- Product: Die, chip, PCIe module

- System role: PCIe device, switch, or autonomous

- Interfaces: PCIe, UCIe, Ethernet

- Integration: In-package, on-board, PCI card

Network Uncorked. GPUs Released!

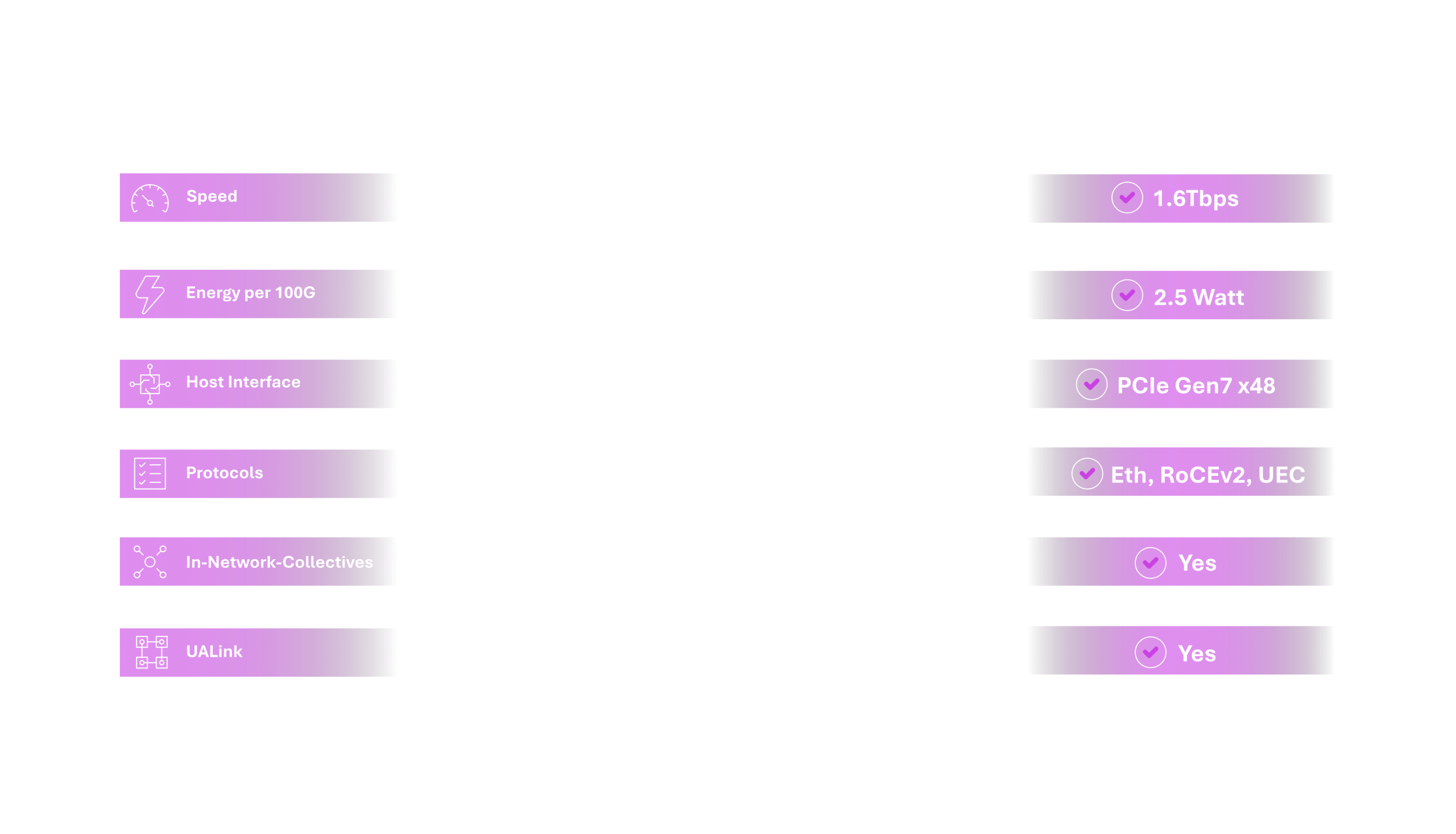

By leaping ahead in bandwidth and latency, integrating compute, and delivering the best cost and energy efficiency, NR2 reduces your training time and cost and boosts your inference responsiveness and ROI.

NR2n Integration Schemes

IO die for AI-CPU/DPU

- Die to Die: UCIe

- Chip peripherals: PCIe, Ethernet

AI-SuperNIC for AI Microserver module

- CPU and GPU connectivity: PCIe

- Module peripherals: Ethernet

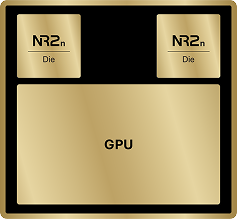

IO die for GPU/XPU

- Die to Die: UCIe

- Peripherals: PCIe, Ethernet

AI-SuperNIC module/card for OEM servers

- Module peripherals: QSFP Ethernet, PCIe connector

- Chip peripherals: PCIe, Ethernet