Software

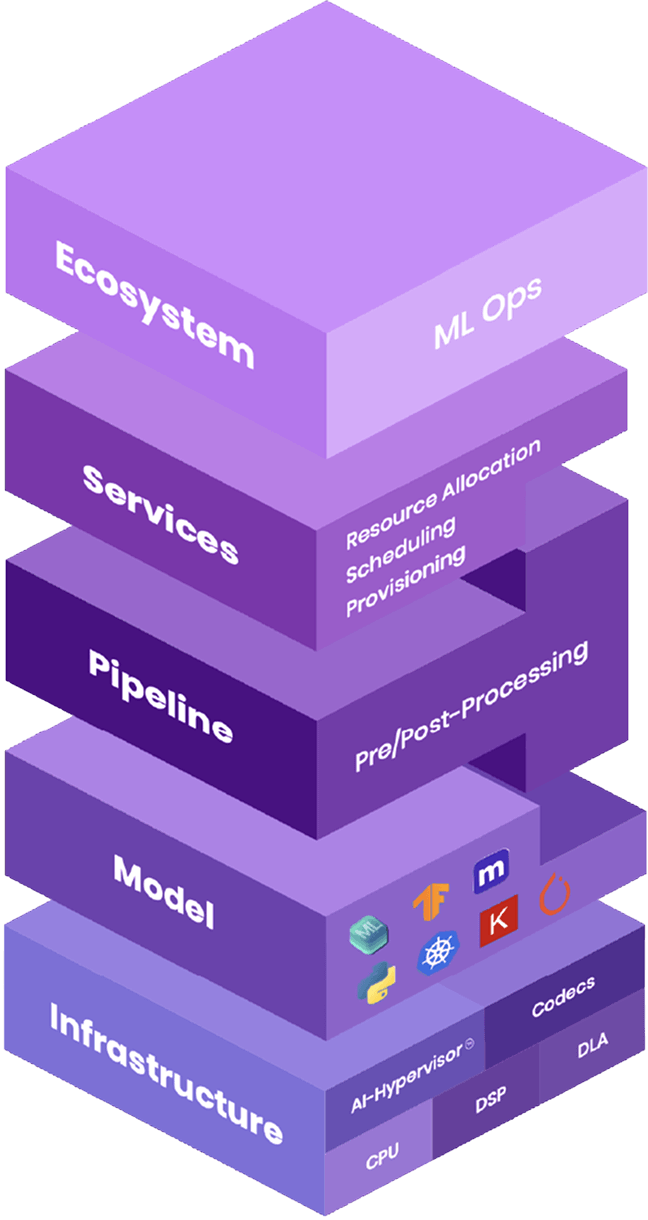

NeuReality’s software is the intelligent orchestration layer that turns complex AI infrastructure into a seamless deployment experience.

At NeuReality, software isn’t an afterthought; it’s the connective tissue woven into the very fabric of our architecture. Designed alongside our NR1® Chip from inception, our full-stack software creates seamless integration points that orchestrate the entire AI inference journey, from development through deployment and serving. While others in the industry focus solely on silicon or hardware, NeuReality’s software-enriched approach delivers a complete solution that lets any business achieve high-performance AI inference faster and with far less overhead.

NR Software Makes Inference Easier and Faster to Deploy

To bridge the gap between the AI ecosystem of data scientists, ML engineers, and DevOps teams and the underlying heterogeneous hardware infrastructure, NeuReality has developed three layers of software that simplify every stage of an AI pipeline, from development to deployment and serving.

Model Layer

Built to develop, optimize, and deploy trained AI models, running them on the GPU first and using NR1’s DSP and CPU as backup.

Pipeline Layer

Built to optimize data pre-processing, post-processing, and other computation related to AI frameworks.

Service Layer

Built to orchestrate, provision, and serve deployed AI pipelines and models.

- Pre-loaded, optimized open-source AI models
- Server-class ARM with a standard Linux OS
- Seamless storage connectivity to NFS and S3
- Kubernetes management and orchestration
- Purpose-built APIs, tools, and runtime for inference deployment
- Integration with common AI frameworks and MLOps backends